Conferences and Publications

Science Advances

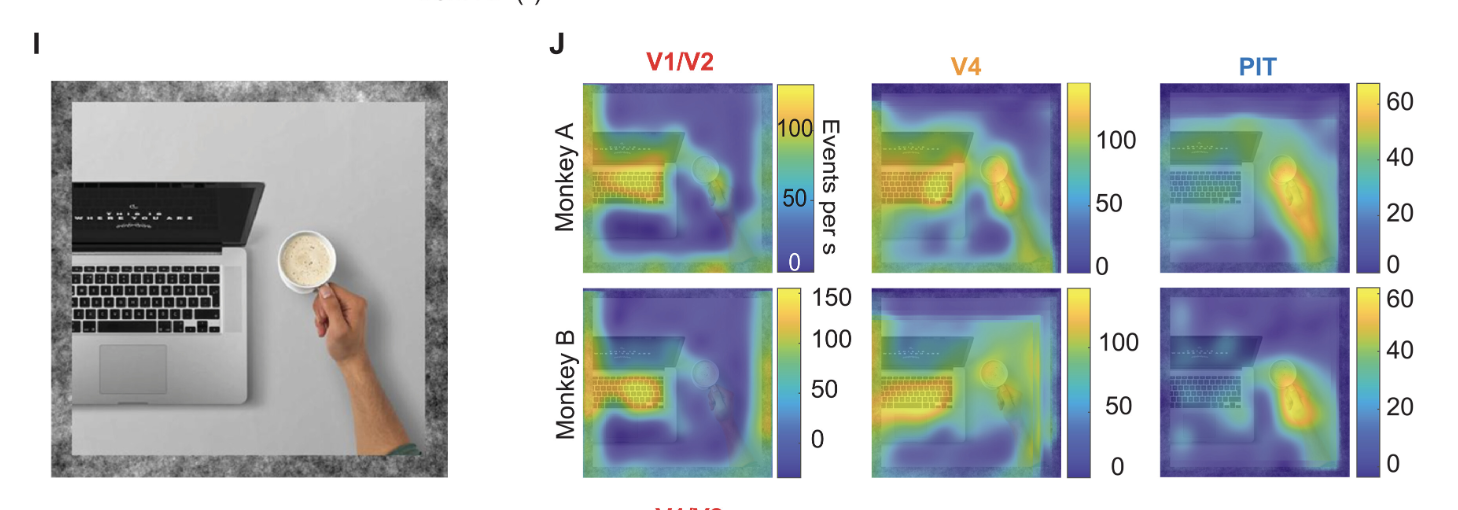

What are the fundamental principles that inform representation in the primate visual brain? While objects have become an intuitive framework for studying neurons in many parts of cortex, it is possible that neurons follow a more expressive organizational principle, such as encoding generic features present across textures, places, and objects. In this study, we used multielectrode arrays to record from neurons in the early (V1/V2), middle (V4), and later [posterior inferotemporal (PIT) cortex] areas across the visual hierarchy, estimating each neuron's local operation across natural scenes via "heatmaps." We found that, while populations of neurons with foveal receptive fields across V1/V2, V4, and PIT responded over the full scene, they focused on salient subregions within object outlines. Notably, neurons preferentially encoded animal features rather than general objects, with this trend strengthening along the visual hierarchy. These results show that the monkey ventral stream is partially organized to encode local animal features over objects, even as early as primary visual cortex.

Nature

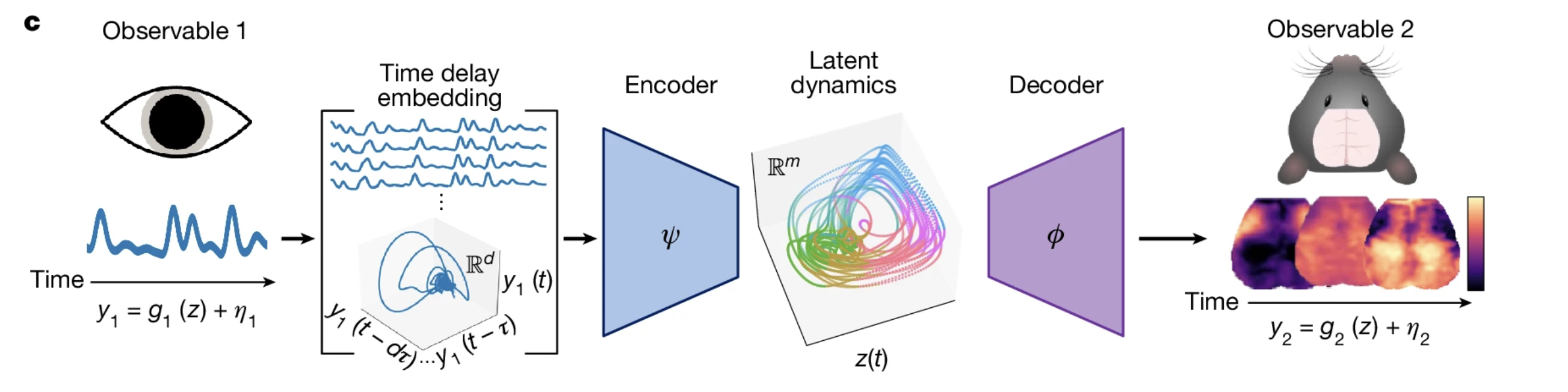

Neural activity in awake organisms shows widespread and spatiotemporally diverse correlations with behavioral and physiological measurements. We propose that this covariation reflects in part the dynamics of a unified, arousal-related process that regulates brain-wide physiology on the timescale of seconds. Taken together with theoretical foundations in dynamical systems, this interpretation leads us to a surprising prediction: that a single, scalar measurement of arousal (e.g., pupil diameter) should suffice to reconstruct the continuous evolution of multimodal, spatiotemporal measurements of large-scale brain physiology. To test this hypothesis, we perform multimodal, cortex-wide optical imaging and behavioral monitoring in awake mice. We demonstrate that spatiotemporal measurements of neuronal calcium, metabolism, and blood-oxygen can be accurately and parsimoniously modeled from a low-dimensional state-space reconstructed from the time history of pupil diameter. Extending this framework to behavioral and electrophysiological measurements from the Allen Brain Observatory, we demonstrate the ability to integrate diverse experimental data into a unified generative model via mappings from an intrinsic arousal manifold. Our results support the hypothesis that spontaneous, spatially structured fluctuations in brain-wide physiology—widely interpreted to reflect regionally-specific neural communication—are in large part reflections of an arousal-related process. This enriched view of arousal dynamics has broad implications for interpreting observations of brain, body, and behavior as measured across modalities, contexts, and scales.
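As a rough illustration of what "a state-space reconstructed from the time history" of a scalar signal can look like, the sketch below builds a time-delay embedding with NumPy. It is not the paper's pipeline: the function name `delay_embed`, the embedding dimension, the lag, and the synthetic sine-wave "pupil" trace are all assumptions chosen for illustration.

```python
import numpy as np

def delay_embed(signal, dim, lag):
    """Stack lagged copies of a 1-D signal into a (n_rows, dim) state matrix."""
    signal = np.asarray(signal, dtype=float)
    n_rows = signal.size - (dim - 1) * lag
    if n_rows <= 0:
        raise ValueError("signal too short for the requested dim and lag")
    # Column k holds the signal shifted forward by k * lag samples.
    return np.column_stack(
        [signal[k * lag : k * lag + n_rows] for k in range(dim)]
    )

# Synthetic stand-in for a pupil-diameter trace (assumption, not real data).
pupil = np.sin(np.linspace(0, 20, 500))
states = delay_embed(pupil, dim=3, lag=5)
```

Each row of `states` is one point in the reconstructed space; a downstream model could then map these points to other measurements.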

Preprint on medRxiv

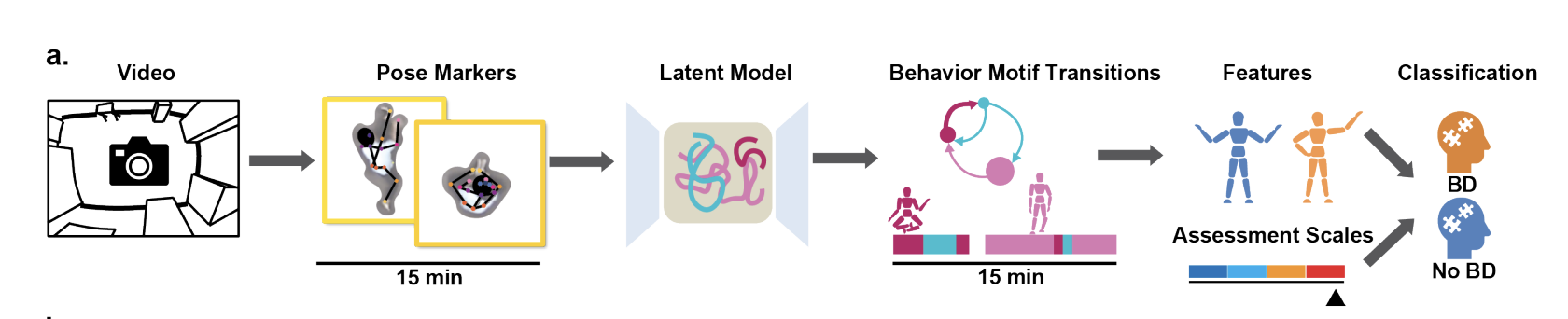

New technologies for the quantification of behavior have revolutionized animal studies in social, cognitive, and pharmacological neurosciences. However, comparable studies in understanding human behavior, especially in psychiatry, are lacking. In this study, we utilized data-driven machine learning to analyze natural, spontaneous open-field human behaviors from people with euthymic bipolar disorder (BD) and non-BD participants. Our computational paradigm identified representations of distinct sets of actions (motifs) that capture the physical activities of both groups of participants. We propose novel measures for quantifying dynamics, variability, and stereotypy in BD behaviors. These fine-grained behavioral features reflect patterns of cognitive functions of BD and better predict BD compared with traditional ethological and psychiatric measures and action recognition approaches. This research represents a significant computational advancement in human ethology, enabling the quantification of complex behaviors in real-world conditions and opening new avenues for characterizing neuropsychiatric conditions from behavior.

Accepted for Computational and Systems Neuroscience (COSYNE)

Free-moving spontaneous behavior is a window into the brain and mind. Individuals with neuropsychiatric conditions such as bipolar disorder (BD) can exhibit distinctive patterns of behavior (McReynolds, 1962). Our objective was to quantify free-moving spontaneous human behavior in real-world contexts among euthymic BD individuals and differentiate them from a healthy control (HC) population based on these identified behavioral features. We analyzed videos of 25 BD patients and 25 HC participants freely moving in an unexplored room for 15 minutes (Young et al., 2007). Using a key-point estimation toolbox (Mathis et al., 2018), we extracted human poses and represented them through a latent variable model (Luxem et al., 2022). Clustering the latent representations identified repeated behavioral motifs, revealing unique features of BD aligned with known clinical observations. Our approach outperformed computer vision (CV) models, expert human annotation, and even established clinical assessment scales in distinguishing BD from HC.

Accepted for Computational and Systems Neuroscience (COSYNE)

Undirected behavior reflects cognitive functions and provides insights for diagnosing psychiatric conditions such as bipolar disorder (BD) (McReynolds, 1962). Open-field animal behaviors have been well studied for this purpose; however, a corresponding human subject paradigm is still lacking, and quantifying complex spontaneous human behaviors is challenging. Here, we demonstrate a semi-supervised model to quantify undirected human behavior, differentiate subtle hallmark behavioral features of BD, and create a natural language generative model to provide nuanced interpretations of behaviors with context information. We found that the dwell time of the motif "approach, inspect, move along" was significantly lower in the BD population than in controls (two-sample t-test, p = 0.04), while no significant difference was found for manually annotated categories.
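For readers unfamiliar with the statistic reported above, here is a minimal sketch of a pooled-variance two-sample t statistic using only the Python standard library. The helper name `pooled_t` and the dwell-time values are invented for illustration and are not data from the study; turning the statistic into a p-value additionally requires the t distribution with the returned degrees of freedom.

```python
from math import sqrt
from statistics import mean, variance

def pooled_t(a, b):
    """Return (t statistic, degrees of freedom) for two independent samples."""
    n1, n2 = len(a), len(b)
    dof = n1 + n2 - 2
    # Pooled sample variance, assuming equal variances in both groups.
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / dof
    t = (mean(a) - mean(b)) / sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, dof

# Hypothetical per-participant dwell times in seconds (made-up numbers).
bd_dwell = [4.1, 3.8, 5.0, 4.4]
hc_dwell = [5.2, 5.9, 4.8, 6.1]
t_stat, dof = pooled_t(bd_dwell, hc_dwell)
```

A negative `t_stat` here means the first group has the lower mean dwell time.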

Accepted as a spotlight poster for IEEE Brain Discovery Neurotechnology Workshop – Brain Mind Body Cognitive Engineering for Health and Wellness and Society for Neuroscience (SfN)

Research on unsupervised quantification of undirected human behavior for bipolar disorder analysis.

Accepted as a poster for Society for Neuroscience (SfN)

Macaque monkeys are foraging and social animals that spend a significant fraction of their time identifying conspecifics, classifying their actions, and avoiding threats from other animals. This suggests that in learning information from the visual world, many neurons of the monkey ventral stream might focus on encoding animal-based features. We discovered that animal masks identified regions with strong neuronal activity for IT better than they did for V4 and for V4 better than for V1 (AUC values, median ± SE; V1: 0.19 ± 0.01, V4: 0.63 ± 0.06, IT: 0.73 ± 0.08). Collectively, our results provide further evidence of an organizing principle of the monkey ventral stream — to encode information diagnostic of animals.
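One plausible way to score how well an animal mask picks out high-activity regions is an AUC that treats mask pixels as positives and heatmap values as scores. The sketch below assumes that formulation; the abstract does not specify the exact computation, and `mask_auc` and the toy arrays are hypothetical.

```python
import numpy as np

def mask_auc(heatmap, mask):
    """Probability that a random in-mask pixel outscores a random out-of-mask pixel."""
    heat = np.asarray(heatmap, dtype=float).ravel()
    inside = np.asarray(mask, dtype=bool).ravel()
    pos, neg = heat[inside], heat[~inside]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("mask must contain both inside and outside pixels")
    # Compare every in-mask pixel with every out-of-mask pixel; ties count half.
    diff = pos[:, None] - neg[None, :]
    return float(np.mean(diff > 0) + 0.5 * np.mean(diff == 0))

# Toy 2x2 example (made-up values): the top row is the "animal" region.
heatmap = np.array([[0.9, 0.8], [0.1, 0.2]])
mask = np.array([[1, 1], [0, 0]])
auc = mask_auc(heatmap, mask)
```

The pairwise comparison uses memory proportional to the product of the two pixel counts, so for full-resolution images a rank-based formula would be a better fit.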

Accepted as a workshop presentation for Computational and Systems Neuroscience (COSYNE) 2022

Workshop presentation on representations in brains and neural networks.

Accepted as an abstract for Society for Neuroscience (SfN) and Bernstein Conference

Are there organizing principles for information encoding along the primate ventral stream? Neurons in each visual area are often tested with different types of stimuli, ranging from simple (e.g., lines in V1) to complex (faces in inferotemporal cortex, IT); however, this strategy limits functional comparisons across areas. By comparing neuronal responses to a given stimulus set across areas, we set out to identify brain-wide organizing principles and determine if these principles are shared by learning-based models of the ventral stream (convolutional neural networks, CNNs).

Washington University in St. Louis, McKelvey School of Engineering, Department of Computer Science Master's Thesis

Do artificial neurons in CNNs learn to represent the same visual information as the biological neurons in primate brains? Previous studies have shown that the visual recognition pathway (ventral stream) in humans and monkeys increasingly represents animate objects. We used a heatmap attribution technique borrowed from convolutional neural networks to generate biological feature maps identifying regions in scenes that elicit responses from neurons along the ventral stream (V1/V2, V4, and IT). We found that image regions containing animals elicited increasingly larger responses along the ventral stream, while such animacy features are not represented in artificial neural networks.

Shape Recognition in Ultrasound with Deep Learning (2020)

Washington University in St. Louis, McKelvey School of Engineering, Department of Electrical and Systems Engineering Capstone Design Thesis

Ultrasound is one of the most common imaging techniques in clinical settings, but its usefulness is limited by its low resolution and heavy dependence on operator skill. Here, we applied a pre-trained neural network to recognize geometric shapes in ultrasound images of 3D-printed samples. We achieved 96% task accuracy and created a database available for future research in ultrasound imaging.

Undergraduate Projects

Internet of Things: Incubator

Embedded Systems & IoT Project

Technologies: Arduino, Photon, Adafruit SI7021, Embedded Systems

Features:

- Plastic bottles: recycle and reuse

- Hardware: wires, resistors, fan, Adafruit SI7021, Photon, Arduino

- Embedded system: Sensors and Actuators + Feedback Control

- UI: graphical sliders and status information for the incubator

- Networking infrastructure: monitor and control the incubator remotely

Internet of Things: Mini Garage Controller

C++, JavaScript, HTML, Embedded Systems

Technologies: C++, JavaScript, HTML, Photon, Arduino

Features:

- Hardware: wires, resistors, buttons, LEDs, two Photons

- Embedded system: Sensors and Actuators + Remote Control

- UI: responsive website and app

- Networking infrastructure: monitor and control the garage light and door remotely

- Functionality: close the door and turn off the light automatically at a set time

Computer Vision Projects in Python

Computer Vision & Machine Learning

Technologies: Python, OpenCV, TensorFlow, PyTorch

Projects Include:

- Edge detection + Line detection

- Image Restoration & Optimization

- Photometric Stereo

- Camera Projection and Transformations

- Estimation, Sampling, Robust Fitting

- Epipolar Geometry, Binocular Stereo

- Optical flow

- Neural Networks

- Semantic Vision Tasks

- GANs and VAEs, unsupervised learning

Web Development: To-do List

React.js, Web Development

Technologies: React.js, JavaScript, HTML, CSS

Interactive web application for task management with modern React.js framework.

3D Rotational Robotics in Simulink and Matlab

Matlab, Simulink, Robotics

Technologies: MATLAB, Simulink, Robotics Control Systems

Development of 3D rotational robotics control systems using MATLAB and Simulink for kinematic and dynamic analysis. [\[CODE\]](https://github.com/ZhanqiZhang66/3R-Robotics)

AI Tic-tac-toe and Gomoku Game

C++, Artificial Intelligence

Technologies: C++, AI Algorithms, Game Theory

Implementation of artificial intelligence algorithms for classic board games including tic-tac-toe and Gomoku with intelligent move prediction and strategy optimization. [\[CODE\]](https://github.com/ZhanqiZhang66/AI-Gomuku)
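The description above does not name the search algorithm, and the linked project is written in C++. As a generic illustration of how an unbeatable tic-tac-toe player is often built, here is a minimal minimax sketch in Python; the board encoding (a 9-character string), the function names, and the choice of minimax itself are assumptions, not a description of the repository's code.

```python
from functools import lru_cache

# Index triples for the three rows, three columns, and two diagonals.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]

def winner(board):
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None

@lru_cache(maxsize=None)
def minimax(board, player):
    """Return (score, move) for the player to move.

    The score is from X's point of view: +1 X wins, -1 O wins, 0 draw.
    """
    won = winner(board)
    if won:
        return (1 if won == "X" else -1), None
    if " " not in board:
        return 0, None
    best_score, best_move = None, None
    for i, cell in enumerate(board):
        if cell != " ":
            continue
        child = board[:i] + player + board[i + 1:]
        score, _ = minimax(child, "O" if player == "X" else "X")
        # X maximizes the score, O minimizes it.
        if best_score is None or (
            score > best_score if player == "X" else score < best_score
        ):
            best_score, best_move = score, i
    return best_score, best_move

# X to move with two in the top row; index 2 completes the line.
score, move = minimax("XX OO    ", "X")
```

Exhaustive search like this is practical for tic-tac-toe but not for Gomoku, where a depth limit, pruning, and a heuristic evaluation would typically be needed.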
